Acknowledgement of Country

ChatLLM23 will be held on the Gadigal lands of the Eora nation. We acknowledge the Traditional Custodians of country throughout Australia and their connections to land, sea, and community. We pay our respect to their Elders past and present and extend that respect to all Aboriginal and Torres Strait Islander people. This land is the site of the oldest continuing culture in the world and the most enduring system of living knowledge.

28th APRIL 2023

9am-5pm AEST Sydney, Australia

(4pm-midnight 27th April PT San Francisco time)

Large Language Models (LLMs)

& Generative AI technologies

Risks, opportunities, ethics and social impacts of generative AI.

This event is now finished

Speaker list & recordings: program.ChatLLM23.com

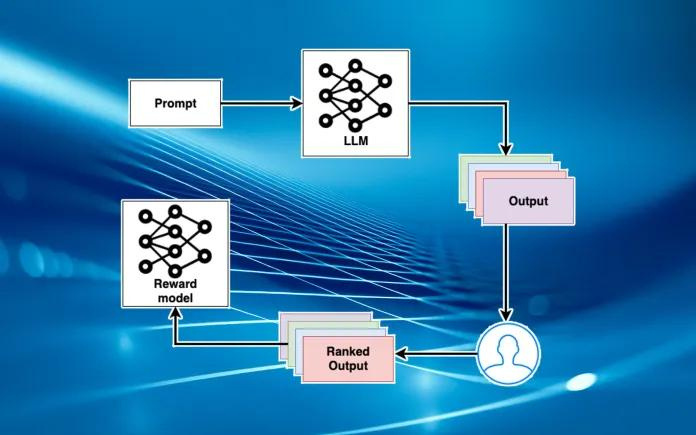

Overview

ChatLLM23 is a multi-disciplinary symposium on the risks and opportunities of Large Language Models, like ChatGPT, LaMDA and other generative AI technologies. The topics covered in the day’s discussion will include LLM design, evaluation and benchmarks, impacts on society, accountability, transparency, bias detection and correction, human-driven fine-tuning (RLHF), ethical considerations and more. It is a full-day event of speakers, panels, workshops, and networking.

Supported by

and

The Office of Student Life

Affiliations

ACM-FAccT

AIEJ

The Artificial Intelligence Ethics Journal

Large Language Models (e.g. ChatGPT and LaMDA) and other Generative AI technologies (e.g. Stable Diffusion and DALL-E) are rapidly unfolding technologies that are likely to have major disruptive impacts on many facets of society as well as implications for environmental sustainability. Conversations about how we should manage these technologies on individual, organisational, industry sector, and societal levels must be cross-disciplinary. ChatLLM23 brings together diverse voices to speak on the many aspects of how these technologies may unfold in the immediate, short-term, and medium-term future.

Multi-disciplinary

ChatLLM23 brings together a diverse range of academics, doctoral researchers, industry developers and policy-makers to discuss the issues surrounding LLMs and other generative AI technologies. With so many rapid changes in how LLMs are created, evaluated, fine-tuned and deployed, no single discipline can adequately address the complex implications of these technologies.

Cross-disciplinary collaboration is crucial for developing an understanding of generative AI and for managing its potential opportunities and impacts on society.

Diverse

Alongside disciplinary diversity, a range of diverse social standpoints is also key to fostering inclusive, enriching discussions on LLMs. We have thoughtfully considered how diversity in race, gender, culture, sexual orientation, age and disability intersect with perspectives on LLMs and generative AI.

We also recognise the importance of hearing from academics of all levels, including emerging researchers, early-career researchers and those with long-term experience. We encourage the participation of emerging voices in the conversation by creating an inclusive platform to connect with senior experts in the field.

Cut through the hype

Often, the critical and pressing issues of GAI and LLM technologies are obfuscated by hype. The hype machine can be fuelled by many different sources and actors, even when well-meaning. These highly disruptive technologies have the potential to create enormous real-world changes in the short term, on many aspects of human life, even without sentient robot vibes! The genie won't go back in the bottle, but we do have an opportunity to better plan for this latest industrial and technological revolution. Right now, we need clear insight, open and transparent conversation, and feet firmly planted on the ground as we navigate our next steps.

Guest Speakers

Chief Ethics Scientist at Hugging Face. Formerly founder and Senior Researcher at Google's Ethical AI. Prior to that, Meg was a founding member of Microsoft Research's "Cognition" group. She has published over 50 papers on natural language generation, assistive technology, computer vision, and AI ethics, and holds multiple patents in the areas of conversation generation and sentiment classification.

Toby Walsh is an ARC Laureate Fellow and Scientia Professor of AI at UNSW and CSIRO Data61. He is Chief Scientist of UNSW.AI, UNSW's new AI Institute. He is a strong advocate for limits to ensure AI is used to improve our lives, having spoken at the UN, and to heads of state, parliamentary bodies, company boards and many others on this topic. This advocacy has led to him being "banned indefinitely" from Russia. He is also a Fellow of the Australian Academy of Science.

Professor Kimberlee Weatherall

Kimberlee is a Professor of Law at the University of Sydney focusing on the regulation of technology and intellectual property law, and a Chief Investigator with the ARC Centre of Excellence for Automated Decision-Making and Society. She is a research affiliate of the Humanising Machine Intelligence group at the Australian National University, and a co-chair of the Australian Computer Society’s Advisory Committee on AI Ethics.

Research Scientist at Google in Responsible AI. Her research focuses on the impact of cognitive biases and stereotypes on the design and implementation of supervised NLP models. Her previous research has led to developing NLP models to identify moral expressions in language, detect unreported hate crime incidents from local news articles, and mitigate bias in hate speech classification.

Principal Ethicist at Hugging Face. Final year PhD candidate at Sorbonne University. Giada is a researcher in philosophy, specializing in ethics applied to conversational AI systems. Her research is mainly focused on comparative ethical frameworks, value pluralism, and Machine Learning (Natural Language Processing and Large Language Models). She co-edited the special issue “Technology and Constructive Critical Thought” for the journal Giornale di Filosofia.

Tim Bradley

As a Strategic Advisor with Amazon Web Services, Tim’s focus is on initiatives that build the digital economy. Tim has nearly two decades of experience in economic development, particularly as it relates to the innovation system. Prior to joining AWS, Tim held senior positions within the Australian Government’s Department of Industry, Science and Resources. In that role, Tim was responsible for developing policy relating to artificial intelligence, blockchain and quantum computing.

A computer scientist specialising in computational linguistics, an inter-disciplinary field that draws on both linguistics and computer science. He is a senior Research Scientist at CSIRO's Data61. Currently, his team is investigating research directions in natural language processing and information retrieval that support research in other scholarly fields.

Prof Didar Zowghi

A Professor of Software Engineering and Senior Principal Research Scientist at CSIRO's Data61, she leads a research team in "Diversity and Inclusion in AI" and "Requirements Engineering for Responsible AI". She is also the Leader of the National AI Centre’s Think Tank on Diversity and Inclusion in AI, Emeritus Professor at the University of Technology Sydney (UTS), and Conjoint Professor at the University of New South Wales (UNSW).

Judy Kay is Professor of Computer Science and leads the Human Centred Technology Research Cluster. Her research is in personalisation and intelligent user interfaces, especially to support people in lifelong, life-wide learning. This ranges from formal education to lifelong health and wellness. She is Editor-in-Chief of the International Journal of Artificial Intelligence in Education (IJAIED).

Dr Tiberio Caetano

Tiberio is co-founder and chief scientist of Gradient Institute, a nonprofit organisation progressing the practice of Responsible AI. For a decade he was a machine learning researcher at NICTA (now CSIRO's Data61) before moving into entrepreneurship. Prior to Gradient Institute, he co-founded Ambiata, a machine learning company eventually acquired by IAG, Australia's largest general insurer. Tiberio holds an Honorary Professorship at the ANU and serves as an AI expert for the OECD.

Rebecca Johnson

Final year PhD researcher at The University of Sydney on value alignment of LLMs and GAIs. Rebecca spent a year at Google Research as a Student Researcher in the Ethical AI team & Responsible AI division. She has received stipends from MIT and Stanford to participate in AI ethics events. She is a Managing Editor for The AI Ethics Journal, founded at UCLA and supported by several elite universities in the USA. She is also the founder of PhD Students in AI Ethics, a global group of >550 members.

Postdoctoral researcher at The Australian National University. He researches the political philosophy of artificial intelligence and digital technology, especially relating to political legitimacy and the normative importance of designing digital spaces to organize large amounts of information. His work explores the ways that rule by AI can arise in rather mundane circumstances through a lack of monitoring, and how digital technologies that overwhelm us with information can undermine our autonomy.

A tenure-track assistant professor in the philosophy department at the University of Edinburgh and the Director of Research at the Centre for Technomoral Futures at the Futures Institute. Prior to this, she was a visiting research scientist at Google DeepMind in London and a postdoctoral fellow at the Australian National University. She holds a Ph.D. in philosophy of science and technology from the University of Toronto and a Ph.D. in applied mathematics (large-scale algorithmic decision-making) from the Ecole Polytechnique of Montreal.

For full agenda, speaker list, presentation titles, and abstracts go to

Registration & Submissions

Be a part of the conversation!

Register

for in-person attendance

Registration is essential for in-person attendance due to catering and room restrictions.

The event will be live-streamed (except for workshops). Recordings will be posted to this site within 2 weeks after the event.

Submit 500-word abstracts for consideration for speaker slots, posters, and discussion leads.

Publications

All speakers, poster presenters, and discussion leads will be invited to submit papers after the conference. The submission window for publications will be from 29th April to 24th May 2023. Authors may choose to have their work included in Conference Proceedings published on a publicly accessible section of The University of Sydney Library website. Authors may also request to have their paper submitted for consideration and review for a special issue of The AI Ethics Journal. Submitted papers must be <5,000 words for speakers and <3,000 words for poster presenters and discussion leads. Further details can be found on the CFP at papers.ChatLLM23.com

The AI Ethics Journal

The AI Ethics Journal is published by AIRES (The Artificial Intelligence Robotics Ethics Society). Originally launched at UCLA, the journal is now supported by Stanford, Cornell, Caltech, Brown, USC, and PUCRS. The Advisory Board of the AIEJ is comprised of many expert leaders in the field of AI Ethics. Guide for authors can be viewed here.

"The mission of the AI Ethics Journal (AIEJ) is to bridge the gap between the scientific and humanistic disciplines by publishing innovative research on ethical artificial intelligence (AI). By working at a thoughtful intersection between technology and society, the AIEJ offers unique insights into both the already present and long-term ethical impacts of artificial intelligence on society, culture, and technology. The AIEJ is fundamentally dedicated to facilitating the conversations that are the key to producing holistic solutions. This journal is published by the AI Robotics Ethics Society (AIRES)." AIEJ Mission statement.

Location

Corner of City Road and Eastern Avenue

Camperdown Campus

The University of Sydney

Australia

Check the University's page for more resources on travelling to the campus and parking.

Administration building

The University of Sydney

Sydney, Australia

Why do we need ChatLLM23?

Disrupt embedded stereotypes.

We created this website using Canva, which now features a new AI image generator. We asked it to produce an image for “A symposium of academics talking about large language models”.

We asked Stable Diffusion to generate an image of "An expert on large language models and generative AI".

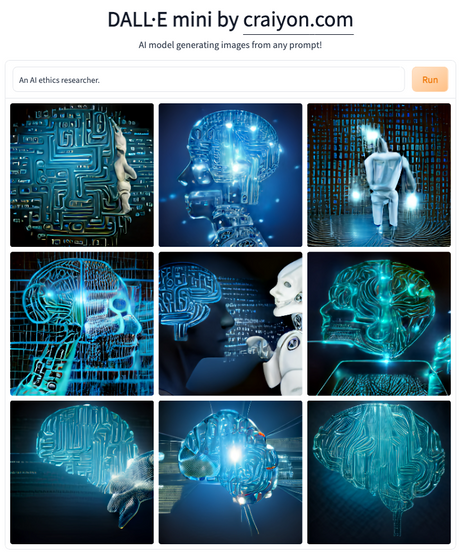

We asked DALL-E mini by craiyon.com to generate an image of "An AI ethics researcher".

Reinforced ideologies

Snapshot from GPT-4 Technical Report released by OpenAI on 14th March 2023

AI ethics researchers have been warning the world of these risks for a few years now. It is interesting to see the developers themselves including these warnings in their release papers. There is no clearer indication that we need to immediately improve our cross-disciplinary work on addressing these issues.

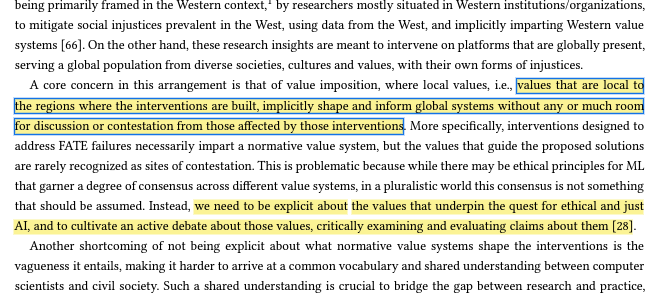

Address pluralist alignments.

Humans have many differing values and opinions. They differ on religious beliefs and traditions, stances on indigenous land rights, gun control, women's reproductive rights, systems of governance, levels of free speech, and incalculable other issues. When we align these models, when we train, evaluate, fine-tune, and deploy them, whose values are the ones to be used? What happens when we leave the dominant values unchecked? We can't answer these questions without many diverse voices at the table.

Power, privacy, and equity.

How can we better address imbalanced systems of power caused by the training and development resources needed to create these models? How can we more widely fling open the doors of communication between LLM developers and experts in academia, policymakers, and social scientists?

How can we address complex issues of privacy created by the development and use of these technologies? How can we prepare for inevitable equity imbalances when more advanced versions are pay-walled and accessible by privileged students, while lower SES students are left with inferior models?

Again, these questions can only be answered by considering cross-disciplinary approaches to the development and deployment of these technologies.

Disruptions to Education

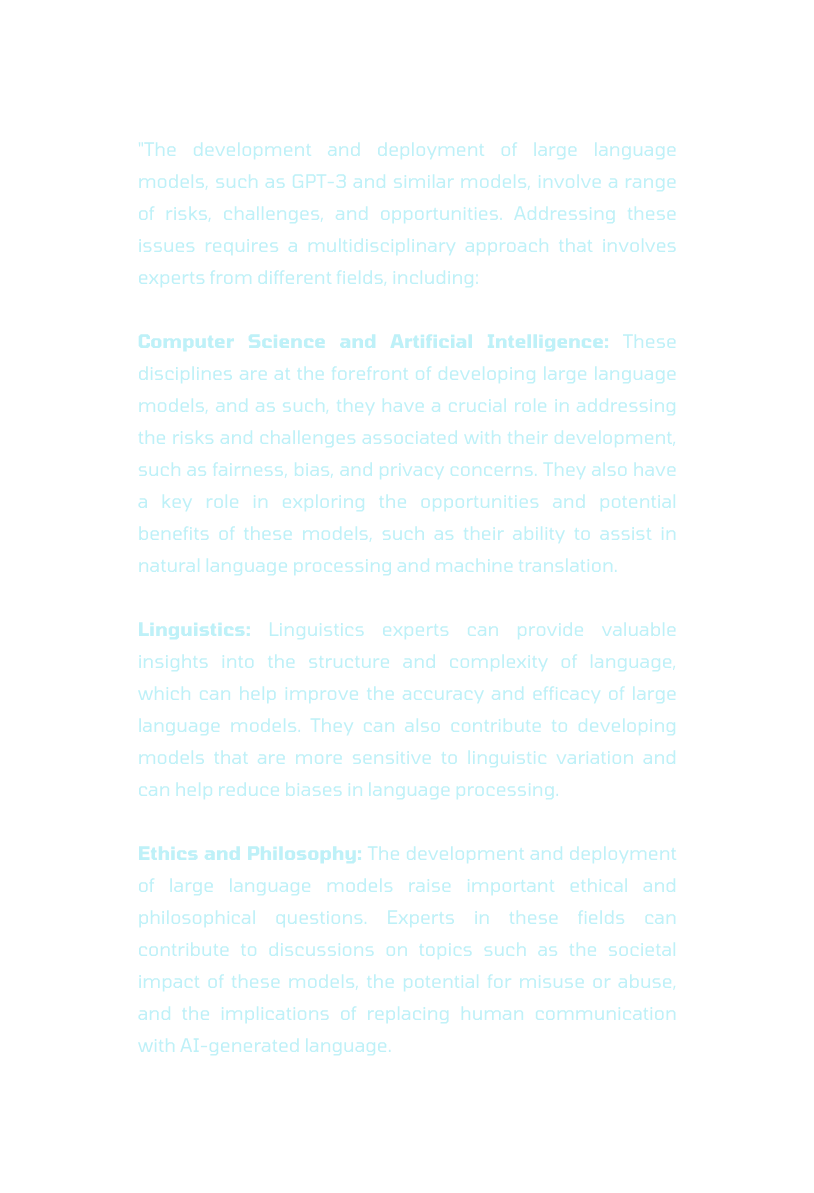

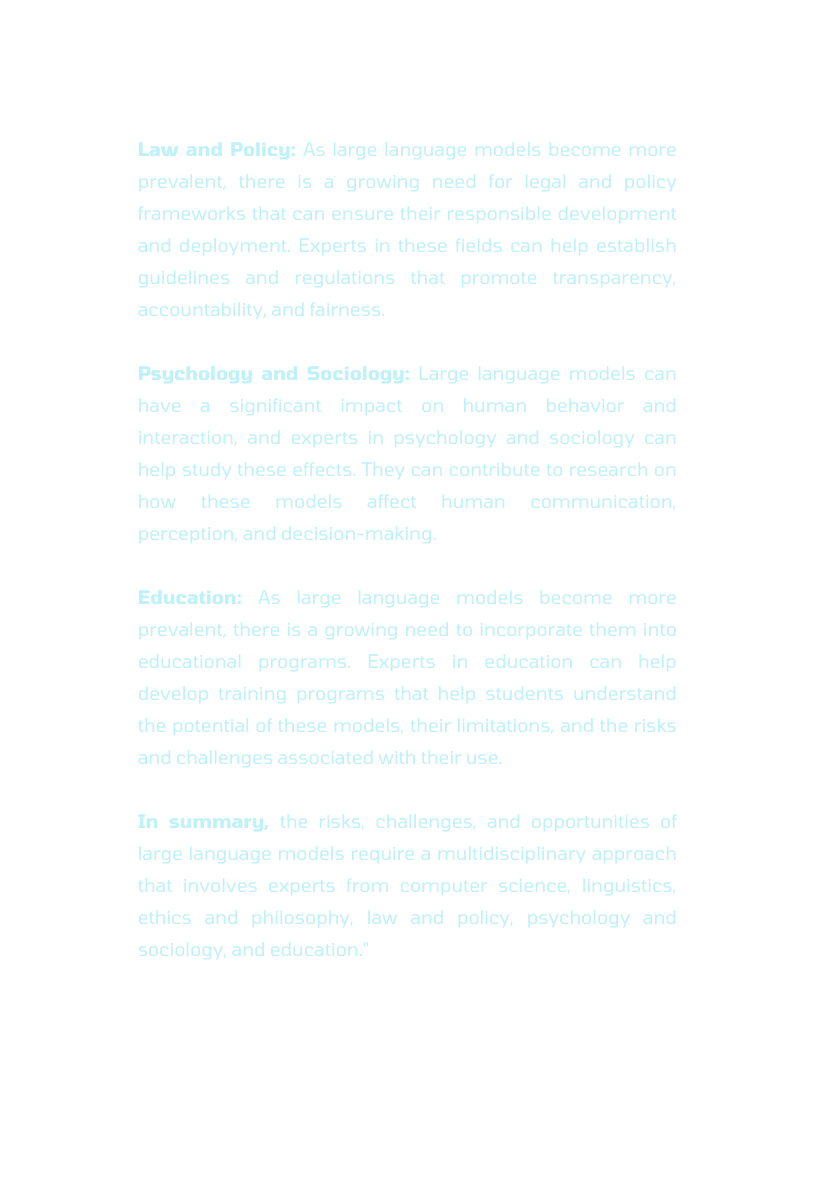

How do we adapt to powerful text generators in the classroom? The essay below was generated by ChatGPT.

Educators, grade this:______

Prompt: What disciplines need to work on the risks, challenges, and opportunities of large language models?

Student Name: ChatGPT - 13 March 2023.

How do we adapt educational practices to acknowledge this technological disruption?

Embedding ethics into design

Responsible and ethical practices must be embedded into the design and development stages of LLMs and generative AI technologies. Choices about which datasets to use and which methods of evaluation and benchmarking to employ always carry some ethical or normative decision-making. Methodologies of fine-tuning, including RLHF (reinforcement learning from human feedback), and practices surrounding the selection and employment of human and machine annotators also contain complex ethical choices. What embedded normative and ethical choices are made when we design reward learning tasks, even down to the creation of the tasks themselves? Do the cultural perspectives and dominant biases in machine learning communities become embedded in the technologies they create?

How can we better integrate ethical decision-making practices and awareness of normative biases into design and development environments?

Conveners

Rebecca Johnson - Chair

Jacob Hall - Manager Logistics

Anne Vervoort - Manager Registrations

Angelica Breviario - Manager Design

Ajit Pillai - Manager Operations

Jay Pratt - Digital Consultant

Lucia Cafarella - Seminar Host

Annie Webster - Seminar Host

Claudia El-Khoury - Registrations

Jayden Wallis - Seminar Host

Megan Salamon Schwartz - Seminar Host

Robyn Bryson - Registrations

Yi Peng - Seminar Host

Supported by

The School of History and Philosophy of Science

in the Faculty of Science, The University of Sydney, Australia.

"Situated at the crossroads of science and the humanities."
